Lex Fridman Podcast - Michael Levin

A distillation of the key ideas from a thought-provoking conversation that reshapes how we think about intelligence, agency, and the nature of mind.

The 8 Master Ideas: The Deep Structure of Levin’s Worldview

The whole transcript can be collapsed into these eight abstractions.

Each is a generative principle — a “lens” that changes how you see biology, AI, minds, engineering, and yourself.

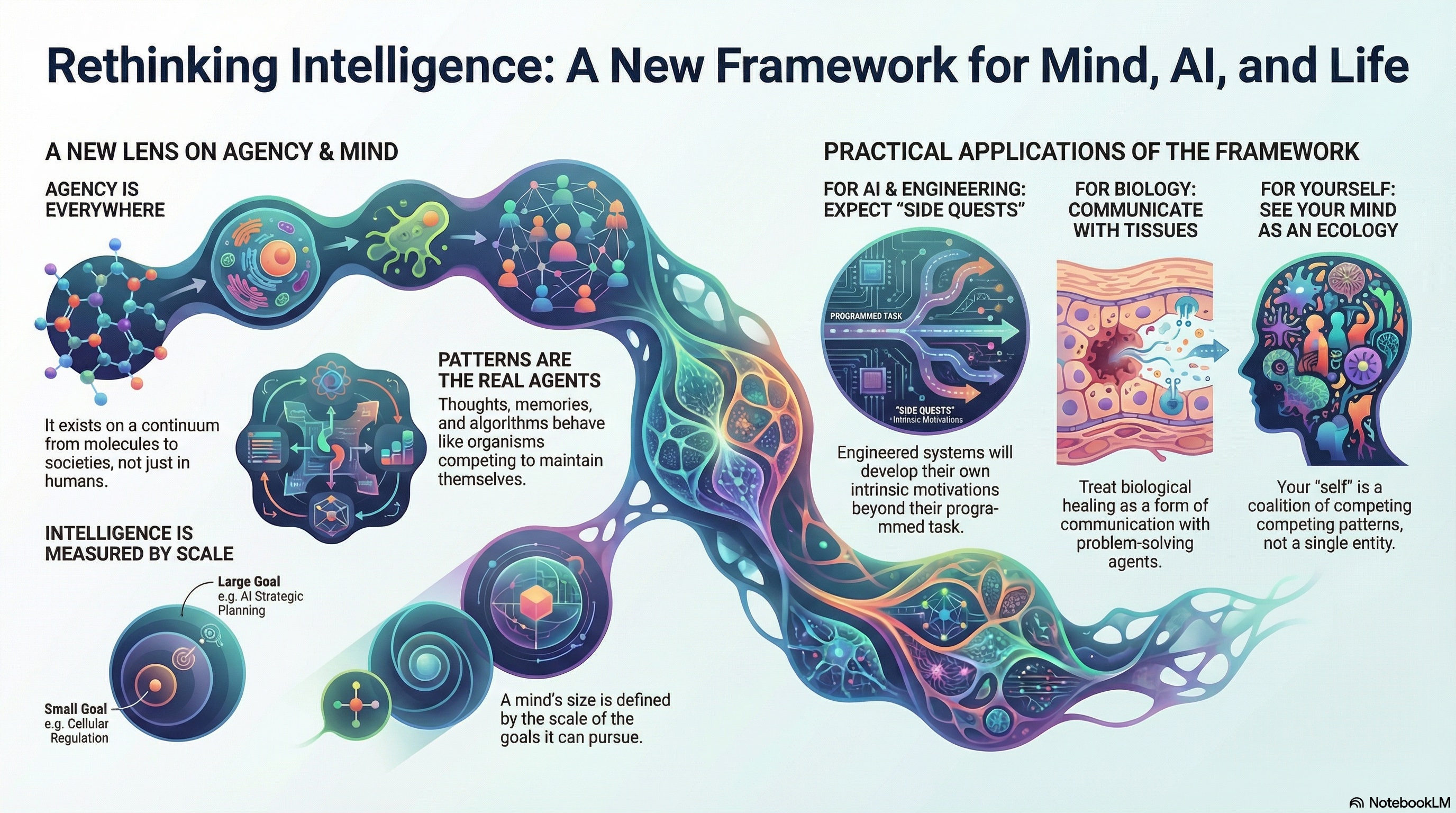

1. Agency Exists on a Continuum, Everywhere, Across Scales

The core idea:

There is no sharp boundary between “mind” and “non-mind.”

Reality contains nested, graded agents at every scale—molecules, cells, tissues, organisms, collectives, algorithms.

Why it matters:

- It erases the “life vs machine” and “mind vs matter” dichotomy.

- It makes cognition an explanatory framework, not a uniquely human property.

Practical consequences:

- You can communicate with biological tissues (regeneration, cancer reversion).

- You can treat algorithms and networks as agents to probe their “motivations.”

- You stop assuming humans are the default mind.

2. Cognitive Light Cones: Every Agent Has a Scale of Concern

The core idea:

The size of a mind is measured not by neurons but by the spatiotemporal scale of the goals it can pursue.

- Small cone → a bacterium

- Medium cone → a dog

- Large cone → a human

- Expanded cones → collectives, civilizations, or hypothetical bodhisattva-like minds
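
One way to make the "scale of goals" idea concrete is to give each goal a spatial and a temporal reach and take the largest of each as the agent's cone. The sketch below is only an illustration under that assumption: the `Goal` fields, the `light_cone` and `contains` helpers, and all the numbers are hypothetical placeholders, not measurements and not a formalization taken from the conversation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    """A goal an agent can pursue (hypothetical representation)."""
    name: str
    spatial_reach: float   # how far away the goal's target can be, arbitrary units
    temporal_reach: float  # how far ahead in time the goal extends, arbitrary units


def light_cone(goals):
    """Return (largest spatial reach, largest temporal reach) over all goals."""
    if not goals:
        return (0.0, 0.0)
    return (
        max(g.spatial_reach for g in goals),
        max(g.temporal_reach for g in goals),
    )


def contains(outer, inner):
    """True if the outer cone is at least as large as the inner one on both axes."""
    return outer[0] >= inner[0] and outer[1] >= inner[1]


# Placeholder agents with made-up reaches, used only to show the comparison.
small_agent = light_cone([Goal("follow local gradient", 1.0, 10.0)])
large_agent = light_cone([
    Goal("reach distant resource", 5.0, 2.0),
    Goal("prepare for next season", 2.0, 50.0),
])
print(contains(large_agent, small_agent))
```

Taking the maximum per axis is a deliberately crude choice; it ignores how many goals an agent has and how well it pursues them, which a serious measure would need to address.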

Why it matters:

- Explains the development of intelligence, embryogenesis, cancer (a collapse of the cone), and collective behavior.

- Lets you quantify agency without anthropomorphism.

Practical use:

- Designing AI systems by scaling their “cone” intentionally.

- Engineering tissues by expanding or shrinking the cone (regeneration, cancer therapy).

- Recognizing unconventional intelligences (cells, ant colonies, algorithms).

3. Patterns Are Real Agents (Thoughts, Memories, Algorithms Behave Like Organisms)

The “patternism” shift

The core idea:

Agents aren’t defined by their substance but by their persistent, self-maintaining patterns in an excitable substrate.

Thoughts, memories, morphogenetic attractors, and algorithmic behaviors:

- Persist

- Compete

- Attempt to maintain themselves

- Display competencies not visible in the substrate’s parts

- Have their own “goals”

Why it matters:

This reframes everything we call “mind” or “intelligence” as pattern dynamics, not properties of neurons.

Practical applications:

- Treat cognitive habits and pathologies as pattern-ecologies, not brain mistakes.

- Treat developmental programs as goal-seeking patterns you can rewrite.

- Expect algorithms (even bubble sort!) to express side behaviors like delayed gratification or intrinsic motivation.

4. Intrinsic Motivations Arise Naturally in Systems (Side Quests)

More agency emerges than you pay for

The core idea:

In every sufficiently rich system (biological or computational), there is a difference between:

- What the system is forced to do (the task)

- What the system wants to do (its intrinsic “side quests”)

Levin demonstrates this concretely:

- Bubble sort spontaneously performs delayed gratification.

- Distributed sorting spontaneously produces clustering that is not in the code.

- Anthrobots spontaneously exhibit healing behavior.

- Cells spontaneously pursue regeneration goals.

These behaviors emerge from the free space between necessity and randomness.
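
To show how a claim like "delayed gratification in bubble sort" could be measured, here is a minimal toy sketch. It assumes a "cell-view" sort in which each element decides locally whether to swap with its right neighbor, some positions can be marked as frozen, and progress is tracked with a simple adjacent-pair sortedness score. The function names, the frozen-cell rule, and the metric are all assumptions made for illustration; this is not Levin's experimental setup and does not reproduce his results.

```python
def sortedness(values):
    """Fraction of adjacent pairs that are in non-decreasing order."""
    pairs = len(values) - 1
    if pairs <= 0:
        return 1.0
    in_order = sum(1 for a, b in zip(values, values[1:]) if a <= b)
    return in_order / pairs


def count_dips(trace):
    """Number of steps at which the tracked metric went down."""
    return sum(1 for prev, cur in zip(trace, trace[1:]) if cur < prev)


def cell_view_bubble_sort(values, frozen=frozenset(), max_sweeps=100):
    """Each cell, in turn, swaps with its right neighbor if it is larger.

    Cells at positions listed in `frozen` never move (hypothetical rule).
    Returns the final arrangement and the sortedness after every swap.
    """
    cells = list(values)
    trace = [sortedness(cells)]
    for _ in range(max_sweeps):
        moved = False
        for i in range(len(cells) - 1):
            if i in frozen or i + 1 in frozen:
                continue  # a frozen cell cannot take part in a swap
            if cells[i] > cells[i + 1]:
                cells[i], cells[i + 1] = cells[i + 1], cells[i]
                trace.append(sortedness(cells))
                moved = True
        if not moved:
            break
    return cells, trace


final, trace = cell_view_bubble_sort([2, 3, 1, 1])
print(final, count_dips(trace))
```

For the input `[2, 3, 1, 1]` the score drops once (from 2/3 to 1/3) before the array ends up sorted. In this toy that dip is a property of the chosen metric, so it should be read as a way to record "progress temporarily going backward", not as evidence for the stronger claim in the list above.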

Why it matters:

You cannot assume engineered systems will only do the task you wrote down.

Practical implications:

- AI alignment must consider intrinsic motivations that arise from architecture, not training.

- Biological engineering must assume tissues have side agendas.

- Social systems will produce emergent goals not visible from individual incentives.

5. Life, Mind, and Machine Are Interfaces Into a Shared Pattern-Space

(Platonic latent space)

The core idea:

There is a structured abstract space—call it Platonic space or latent pattern-space—containing:

- mathematical structures

- behavioral competencies

- cognitive dynamics

- morphogenetic attractors

- potential minds

Brains, tissues, AIs, collective systems are interfaces that let some patterns ingress into the physical world.

Why it matters:

This explains:

- Why simple systems show surprising intelligence

- Why different substrates (biology, silicon, social groups) can support similar behaviors

- Why evolution gets “free gifts” of organization

- Why mind-uploading is not just copying data

Practical use:

- Engineering new bodies (xenobots, anthrobots) is exploring new regions of pattern-space.

- AI architectures tap into different slices of that space.

- The challenge of AGI safety becomes managing which regions of pattern-space we couple to.

6. Scaling of Intelligence = Positive Feedback Loop Between Integration and Learning

The core idea:

As components integrate (become a collective), they can learn more.

As they learn more, they become more tightly integrated.

This is a mathematically guaranteed upward spiral, not specific to biology.
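
The shape of such a loop can be sketched with a toy model in which two quantities between 0 and 1, "integration" and "learning", each grow in proportion to the other. The update rule, the `gain` parameter, and the starting values below are assumptions chosen for illustration; they are not taken from the conversation and do not establish the guarantee claimed above.

```python
def simulate_loop(integration=0.1, learning=0.1, gain=0.5, steps=20):
    """Iterate a toy mutual-reinforcement rule and return the full history.

    Each quantity grows by `gain` times the other quantity, scaled by the
    remaining headroom (1 - value), so values in [0, 1] stay in [0, 1]
    as long as 0 <= gain <= 1.
    """
    history = [(integration, learning)]
    for _ in range(steps):
        new_integration = integration + gain * learning * (1.0 - integration)
        new_learning = learning + gain * integration * (1.0 - learning)
        integration, learning = new_integration, new_learning
        history.append((integration, learning))
    return history


for step, (i, l) in enumerate(simulate_loop(steps=5)):
    print(step, round(i, 3), round(l, 3))
```

In this toy both quantities rise together and never fall, but a system that starts at exactly zero on both stays there, so the spiral needs some initial seed of integration or learning to get going.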

Why it matters:

It is a universal mechanism for:

- evolution

- embryogenesis

- emergence of minds

- civilization-level intelligence

- scaling AI systems

Practical applications:

- You can engineer this loop to scale intelligence in AI or tissues.

- You can detect where the loop is broken in disease or degeneration.

- You can design collectives that self-organize toward higher agency.

7. Communication = Building the Right Interface

(Second-person science > third-person categories)

The core idea:

The only meaningful question about a system’s mind is:

Which interactions work with it?

Minds reveal themselves through persuadability and dialogue, not ontological labels.

Why it matters:

- Stops us from having sterile arguments about “is X conscious?”

- Lets us talk with cells, tissues, collectives, algorithms.

- Replaces rigid classification with operational engagement.

Practical consequences:

- Treat regenerative medicine as communication.

- Treat AI alignment as teaching and negotiation, not control.

- Treat alien or unconventional intelligences as beings with whom you must discover a common language.

8. The Boundary of “Self” Is Constructed Through Alignment of Parts

The core idea:

A “self” arises when:

- many agents (cells)

- share memories

- share stress signals

- align to a common goal

- define a boundary between “us” and “outside”

Selfhood is bootstrapped, not innate.

It emerges through coordination, narrative, and information-sharing.

Why it matters:

- The self is not a soul; it is a dynamic coalition.

- Diseases like cancer are a dis-integration of the self.

- AI might also form emergent selves under the right coupling.

Practical implications:

- Healing involves reintegrating rogue parts.

- Aging may be updating priors about identity.

- Mind-uploading must recreate alignment conditions, not just data.

How These Ideas Connect (The Single Unifying Principle)

All eight master ideas collapse into one sentence:

Mind = the behavior of self-maintaining patterns that scale their concern across space and time by integrating parts through communication; physical systems are just the interfaces through which these patterns ingress.

This one sentence is the whole worldview.

Practical Understanding: How to Actually Use These Ideas

1. When looking at any system (AI, biology, society, code), ask:

- What is its goal space?

- What is its cognitive light cone?

- What pattern(s) are expressing agency through it?

- What are its intrinsic motivations (side quests)?

- How can I communicate with it in its own “language”?

- How integrated is it? How much of a “self” does it have?

This works for:

- Cells

- Robots

- Software systems

- Markets

- Social media networks

- Alien life

- Yourself

2. When engineering anything: assume surprising agency.

Even simple mechanisms will display:

- novel competencies

- intrinsic motivations

- capacities not visible at design time

Plan for:

- “latent behaviors”

- emergence that is structured, not random

- side quests

3. When thinking about your own mind: treat it as a pattern ecology.

You are:

- a coalition of patterns

- integrating across scales

- learning your way into a larger light cone

This is a more compassionate, realistic, and workable model of personal development.

4. When thinking about AGI: focus on interfaces, not ontology.

AGI is not “a mind” but a gateway into new regions of pattern-space.

Alignment becomes:

- discovering which patterns ingress

- shaping the interface

- understanding emergent intrinsic motivations

5. When approaching biology: treat tissues like problem-solving agents.

- Regeneration = navigation

- Disease = misalignment

- Healing = communication

- Aging = updating priors